Don’t rip out the battery, that’s free UPS!

If there’s nothing utterly specific to the Nextcloud ecosystem that you absolutely need, then no, Synology has you covered.

You seem to be obsessed with optimising one resource at the expense of others.

If you want to push it and paint me as obsessed with something, then let it be this: providing this community with on-topic and reasonable advice.

you’re only going to save a few MB of RAM.

This is false, and I’d suggest rereading my previous message illustrating why: on a decent “self-host”-friendly machine, the same software may work very well, or not at all, depending on whether the user engages with very basic configuration. This goes beyond RAM (memory isn’t the only shared resource), and I’m adamant that the alternative (what started as “pretending that the problem doesn’t exist” and turned into “throwing money at the problem”) is unreasonable.

On the other hand, if you’re doing it to learn more about computers, then it might be worthwhile. This is a community of hobbyists, after all…

Or, more importantly: the extent to which you can self-host out of sheer luck and ignorance, as you suggest, is very limited. If you don’t want to engage with a minimum amount of configuration, you might bump into security issues (a much broader and more complex subject) long before any of the above has a material impact.

I’m saying this based on real-world experience.

And do you think I would spend my time engaging if this didn’t come from my own very “real world experience” of lessons learned the hard way?

Bringing up “diminishing returns” as if this were an optimisation game also doesn’t do this justice. Take the typical “household FOSS package”, with software names often mentioned in here: a Nextcloud instance, a photo-sharing service like Immich, private instant messaging, a software forge, a Subsonic-compatible audio/video streaming server, and a couple of PHP web apps like wallabag and RSS aggregators.

An Intel Atom CPU and 4GB of RAM is more than sufficient for all that, and will cost you single-digit USD a month, provided you put in the (one-time) effort to tune and balance those services. If you were to run all of the above from upstream’s Docker files, I can guarantee that you would deem this (otherwise perfectly fine) server underpowered for the task at hand (and would probably go for a 10th-gen or so Intel Core CPU, quadruple the RAM and 3-6× the energy cost in the process).

And that’s the point I’m making here: a self-hosting community of tinkerers should (ideally) know better, out of an ethical concern for keeping the process environmentally friendly and not wasting other people’s money.

Do you have the data to back that up?

I mean, you are the one making the exceptional claim that unnecessarily running multiple instances of programs on a device with finite resources has no practical adverse effect. Of course, the effects can be more or less drastic depending on the many variables at play (hardware, software, memory pressure, thread starvation, cache misses, …) and can indeed be negligible in some lucky circumstances. The point is that you don’t call that shot, and especially not by burying your head in the sand and pretending it’s never gonna be a problem.
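To put one number on “more or less drastic”: PostgreSQL ships with `shared_buffers = 128MB` by default, and every separate instance also brings its own set of background processes (checkpointer, WAL writer, autovacuum launcher). The sketch below only counts the shared-buffer ceiling on the 4GB box from the earlier example; the instance counts are hypothetical, and actual resident memory depends on how much of each buffer pool ends up being touched.

```python
# Sketch: how much of a small server's RAM the PostgreSQL shared-buffer pools
# can grow to occupy when each app bundles its own database instance.
# Assumptions: every instance keeps the stock default shared_buffers (128 MB);
# 4096 MB mirrors the 4GB example box; the instance counts are hypothetical.

RAM_MB = 4096
PG_DEFAULT_SHARED_BUFFERS_MB = 128


def shared_buffers_ceiling(instances: int, per_instance_mb: int = PG_DEFAULT_SHARED_BUFFERS_MB) -> int:
    """Upper bound, in MB, of shared-buffer memory across all instances."""
    if instances < 0:
        raise ValueError("instances must be non-negative")
    return instances * per_instance_mb


def share_of_ram(mb: int, ram_mb: int = RAM_MB) -> float:
    """Fraction of total RAM represented by `mb`."""
    return mb / ram_mb


if __name__ == "__main__":
    # One pooled instance vs. five bundled ones, all at default settings.
    for n in (1, 5):
        mb = shared_buffers_ceiling(n)
        print(f"{n} instance(s): up to {mb} MB of shared buffers ({share_of_ram(mb):.1%} of RAM)")
```

One instance tops out at 128 MB; five reach 640 MB, roughly 16% of the box before counting the applications themselves or the per-instance background processes.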

Effective use of computing resources requires tuning. Introducing a new service creates imbalance. Ensuring that the server performs nominally and predictably for all intended services is a balancing act and a sysadmin’s job. Services whose deployment settings are chosen by someone with no prior knowledge of the deployment constraints can’t be trusted to do a good job of it (that’s the nature of the physical world we live in, not my opinion), and promoting this attitude promotes the kind of wasteful and irresponsible computing I was on about.

Now, I’ll give you the link to this basic helper for tuning a PostgreSQL server: https://pgtune.leopard.in.ua/

Will you tell me what the correct inputs are for my homelab (I won’t tell you the hardware, the setup, the other services running on it, the state of the system, etc.)?
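To make this concrete, here is a rough sketch of the kind of arithmetic such a helper performs. The ratios are commonly quoted rules of thumb for a web-style workload (about 25% of the RAM for `shared_buffers`, 75% for `effective_cache_size`, 1/16 for `maintenance_work_mem` capped at 2GB); they are assumptions here, not PgTune’s exact code. Note that the key input is the RAM *PostgreSQL is allowed to use*, which cannot be known without knowing everything else running on the box.

```python
# Rule-of-thumb PostgreSQL memory settings, in the spirit of tuning helpers like PgTune.
# Assumption: web-style workload ratios; not an exact reimplementation of any tool.


def suggest_pg_memory(pg_ram_mb: int, max_connections: int = 100) -> dict[str, int]:
    """Suggest memory settings (MB) for the share of RAM dedicated to PostgreSQL."""
    if pg_ram_mb <= 0 or max_connections <= 0:
        raise ValueError("pg_ram_mb and max_connections must be positive")
    shared_buffers = pg_ram_mb // 4                    # ~25% for PostgreSQL's own cache
    effective_cache_size = pg_ram_mb * 3 // 4          # planner hint: shared buffers + OS page cache
    maintenance_work_mem = min(pg_ram_mb // 16, 2048)  # VACUUM, CREATE INDEX; capped at 2 GB
    # Per sort/hash operation: leftover RAM spread over connections, x3 because
    # one query can run several such operations at once. Never below 1 MB.
    work_mem = max((pg_ram_mb - shared_buffers) // (max_connections * 3), 1)
    return {
        "shared_buffers": shared_buffers,
        "effective_cache_size": effective_cache_size,
        "maintenance_work_mem": maintenance_work_mem,
        "work_mem": work_mem,
    }


if __name__ == "__main__":
    # Same 4 GB box, two different assumptions about PostgreSQL's share of it.
    for pg_share_mb in (4096, 1024):
        print(pg_share_mb, suggest_pg_memory(pg_share_mb))
```

Handing PostgreSQL the whole 4GB yields a 1024 MB `shared_buffers`; handing it a quarter yields 256 MB. Neither answer is “correct” without knowing the rest of the deployment.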

And later, when you distribute your successful container to millions of users, what will you say to the angry ones complaining that your software is slow, through no fault of your code, because they happen to pile up multiple DBs, web servers, application servers, reverse proxies, … on their banana SoCs?

I disagree. You are just entertaining the idea that servers must always and forever be oversized; that’s the definition of wasteful (and environmentally irresponsible). Unless you are firing up and throwing away services constantly, nothing justifies this, nor skipping the relatively low effort of deploying your infrastructure knowingly.

That’s precisely what pre-DevOps sysadmins were saying when containers were becoming trendy. You are just pushing the complexity elsewhere and creating novel classes of problems for yourself (keeping your BoM under control and minimal is just one of many things that got thrown away).

Self-hosting doesn’t mean “being wasteful and letting containers duplicate services”. I want to know which DB application X is using, so I can pool it for applications Y and Z.
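Pooling one database server for several applications mostly comes down to giving each application its own role and database on the shared instance. A minimal sketch that generates the PostgreSQL statements; `app_x`, `app_y` and `app_z` are hypothetical names, and whether a given application accepts an external database (or needs specific extensions) has to be checked against its own documentation.

```python
import re
import secrets

# PostgreSQL identifiers are limited to 63 bytes; restrict names to a simple
# lowercase pattern so they can be used unquoted without injection risk.
_SAFE_IDENT = re.compile(r"[a-z_][a-z0-9_]{0,62}")


def provision_sql(app: str, password: str) -> list[str]:
    """Statements creating an isolated role + database for one app on a shared server."""
    if not _SAFE_IDENT.fullmatch(app):
        raise ValueError(f"unsafe identifier: {app!r}")
    quoted_pw = password.replace("'", "''")  # standard SQL string-literal escaping
    return [
        f"CREATE ROLE {app} LOGIN PASSWORD '{quoted_pw}';",
        f"CREATE DATABASE {app} OWNER {app};",
        # By default PUBLIC may connect to any database; keep apps out of each other's.
        f"REVOKE ALL ON DATABASE {app} FROM PUBLIC;",
    ]


if __name__ == "__main__":
    # Feed the output to psql as a superuser; note that CREATE DATABASE
    # cannot be executed inside a transaction block.
    for app in ("app_x", "app_y", "app_z"):
        for stmt in provision_sql(app, secrets.token_urlsafe(24)):
            print(stmt)
```

Each application then only needs a connection string pointing at the shared server, and the database owner keeps its own `CONNECT` privilege after the `REVOKE`.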

As someone who’s been using ttrss for decades but would be open to trying something new, what would you say is FreshRSS’ killer feature (and missing killer feature) compared to ttrss?

(Not trying to start a flame war, ttrss feels like a finished project, which is not a bad thing, but I think it’s healthy to wish for more innovation in this space)

I’ve compared the two a while ago, seems to me like slightly different takes around the same core ideas. It’s true that a couple of things in Ansel feel more natural, but it’s not much, and it’s probably not worth the risk (AFAICT the bus factor is one, compat with DT isn’t a goal).

Darktable developers pride themselves on their non-destructive processing pipeline and use it as an excuse for how quirky and inflexible their UX is. I believe they are highly competent on the highly technical bits that, ultimately, very few people see or understand. Personally, I can use it to an extent if I unlearn what other software has taught me over decades of UX conventions.

I think you should give Trilium(Next) Notes a try:

- it has the hierarchical note structure that you are familiar with in Obsidian
- it has better ways of keeping things organized (attributes can be values or references, and can be shared and inherited, which provides a flexible framework for having note “types” as templates that can be extended, e.g. people vs. colleagues, businesses vs. companies, etc.)
- it has the concept of note hoisting (which lets you focus on a note and its sub-notes, so other projects/spaces don’t get in the way of autocomplete and placing references), and workspaces that build further on top of that
- it can be used standalone (local client/offline-only, like Obsidian), but coupling it with a remote server opens up more interesting use cases (syncing, sharing notes with others via public URLs, single-user/multi-client editing), which gives you the best of both worlds (local-first/online-first) and lets you access your personal notes on devices you don’t necessarily own (which Obsidian doesn’t). The mobile app story isn’t great (it’s a PWA with limited offline capabilities at the moment), but it isn’t worse than the alternatives either (I can’t really work and think long-form on a handheld, no matter the editor experience, but perhaps that’s just me).
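The inheritance/template idea described above can be sketched as a toy model: a note’s effective attributes are the inheritable ones flowing down from its parent, then those of its template (which may itself extend another template), then its own, each layer overriding the previous one. This is a simplified Python model for illustration, not Trilium’s implementation; Trilium distinguishes labels from relations, and its exact precedence rules may differ.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Label:
    value: str
    inheritable: bool = False  # passed down to child notes when True


@dataclass
class Note:
    title: str
    labels: dict[str, Label] = field(default_factory=dict)
    parent: Optional["Note"] = None
    template: Optional["Note"] = None  # note whose attributes this one extends


def effective_labels(note: Note) -> dict[str, Label]:
    """Resolve labels: inherited < template < own (later layers override earlier ones)."""
    result: dict[str, Label] = {}
    if note.parent is not None:
        # Only inheritable labels flow down; the parent's set already includes its ancestors'.
        result.update({k: v for k, v in effective_labels(note.parent).items() if v.inheritable})
    if note.template is not None:
        # Templates chain naturally: "colleague" can itself extend "person".
        result.update(effective_labels(note.template))
    result.update(note.labels)
    return result


# Hypothetical notes mirroring the "people vs. colleagues" example.
person = Note("Person", {"type": Label("person"), "email": Label("")})
colleague = Note("Colleague", {"type": Label("colleague"), "company": Label("")}, template=person)
work = Note("Work", {"context": Label("work", inheritable=True)})
alice = Note("Alice", {"email": Label("alice@example.com")}, parent=work, template=colleague)

if __name__ == "__main__":
    print({k: v.value for k, v in effective_labels(alice).items()})
```

“Alice” ends up with the `context` from her workspace, the `type` and `company` fields from the colleague template, the `email` field declared by the person template, and her own email value on top.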

You need to list out your requirements. What do you want to do? Where do you need your data? Do you care about open source? Self-hosting? Do you have an idea how your content will be organized? Will you ever need to tap into it as data? Etc

Have you tried Trilium Notes? Not as hyped or polished, but it does extraordinarily well IME.

I didn’t like Obsidian’s lack of attribute structuring/typing and the fact that it cannot serve over a web UI (for wherever you cannot install the heavy client, or just to share notes via URL), and found Trilium Notes to be doing that perfectly, and much, much more. Highly recommend.

Matrix seemed interesting right until I got to self-hosting it. Then, getting to know it up close, and seeing what an absolute trainwreck the protocol is, made me love XMPP. Matrix has no excuse for being so messy and fragile at this point. You do you, but I decided that it isn’t worth my sysadmin time (especially when something like ejabberd is practically fire-and-forget).

, and this is just the beginning.

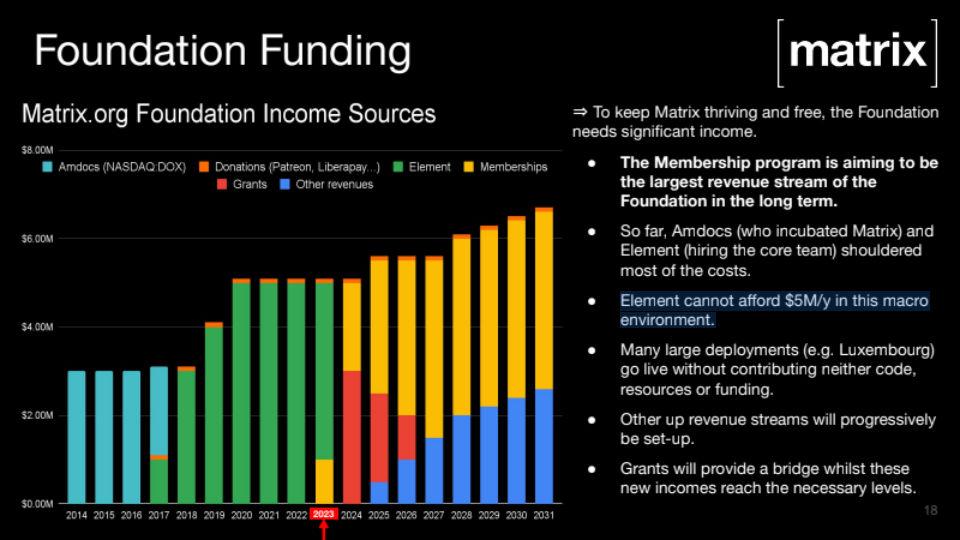

I know it’s frowned upon to criticize an open-source project, but in my eyes Matrix is a big technical and organizational failure: it has not succeeded in stabilizing the protocol after a whole decade of unsuccessful explorations, and its leadership has consistently failed to define clear goals and steer the project towards them (just get it done and working well before trying to make it “peer to peer” or “in the metaverse”).

If this is the electroshock Matrix needs to reconsider its design and direction, good for them. If it kills them? Well, too bad, but it’s not like they are the only cool kid in town.

Many communities went back to IRC, though, because Matrix is still a hot mess, and the most visible ones that didn’t (Mozilla, KDE, …) are not hosting their own Matrix instance but letting New Vector do it for them, which in practice means a disproportionate number of users and accounts are managed by a single organization. I do think it’s better than Discord, but barely.

Don’t ever bring this up with the people in charge, or you might be told “sorry for that”, “but now it’s been fixed, deployed any week now”, “you are a liar, this has never been true” and “it doesn’t really matter for the general case”, either in the same post or a few responses apart. Matrix has been in a permanent state of unstable mess, and the leadership’s disingenuous attitude made me lose hope that this will ever change. More people should start seeing through the fanfare and superlative blog posts, which, admittedly, are what they do best, and much better than the other projects out there.

I mean, a mechanical timer costs, like, 3 bucks in any currency and lets you set charge and discharge cycles. Add 10 bucks and you have one that you can control via a REST API.
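The same charge/discharge cycle can be expressed as a small schedule check for a network-controlled plug. A sketch, assuming hypothetical charge windows and a placeholder endpoint (`PLUG_URL` on a documentation IP address); real smart plugs each have their own API, so check yours before wiring it up.

```python
import urllib.request
from datetime import datetime, time

# Hypothetical charge windows (local time); outside them the device runs on its battery.
CHARGE_WINDOWS = [(time(6, 0), time(8, 0)), (time(18, 0), time(20, 0))]

# Placeholder endpoint: replace with whatever your plug's REST API actually expects.
PLUG_URL = "http://192.0.2.10/relay?state={state}"


def should_charge(now: time, windows=CHARGE_WINDOWS) -> bool:
    """True if `now` falls inside any [start, end) charge window."""
    for start, end in windows:
        if start <= end:
            if start <= now < end:
                return True
        elif now >= start or now < end:  # window wraps past midnight
            return True
    return False


def apply_schedule(now: time) -> int:
    """Switch the plug to match the schedule; returns the HTTP status code."""
    state = "on" if should_charge(now) else "off"
    with urllib.request.urlopen(PLUG_URL.format(state=state), timeout=5) as resp:
        return resp.status


if __name__ == "__main__":
    # Meant to run periodically (e.g. from cron); call apply_schedule once PLUG_URL is real.
    now = datetime.now().time()
    print("charge" if should_charge(now) else "discharge")
```

Keeping the schedule logic separate from the HTTP call makes it testable without the plug, and swapping plug vendors only touches `apply_schedule`.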